Introduction

Pig is a Hadoop extension that simplifi es Hadoop programming by

giving you a high-level data processing language while keeping Hadoop’s simple

scalability and reliability. Yahoo , one of the heaviest user of Hadoop (and a

backer of both the Hadoop Core and Pig), runs 40 percent of all its Hadoop jobs

with Pig.

Twitter is also another well-known user of Pig.

Pig enables data workers to write complex data

transformations without knowing Java. Pig’s simple SQL-like scripting language

is called Pig Latin.

Pig is complete, so you can do all required

data manipulations in Apache Hadoop with Pig.

Pig can invoke code in many languages like

JRuby, Jython and Java. You can also embed Pig scripts in other languages.

Pig works with data from many sources, including structured and

unstructured data, and store the results into the Hadoop Data File System.

Pig has two major components:

- A high-level data processing language called Pig Latin .

- A compiler that compiles and runs your Pig Latin script in a choice of evaluation mechanisms.

The main evaluation mechanism is Hadoop. Pig also

supports a local mode for development purposes.

Pig simplifies programming because of the ease of

expressing your code in Pig Latin.

Thinking like a Pig

Pig has a certain

philosophy about its design. We expect ease of use, high performance, and

massive scalability from any Hadoop subproject. More unique and crucial to

understanding Pig are the design choices of its programming language (a data

flow language called Pig Latin), the data types it supports, and its treatment

of user-defined functions (UDFs ) as first-class citizens.

Data types

We can summarize Pig’s philosophy toward data types in its slogan of “Pigs eat anything.”

Input data can come in any

format. Popular formats, such as tab-delimited text files, are natively

supported. Users can add functions to support other data file formats as well.

Pig doesn't require metadata or schema on data, but it can take advantage of them

if they’re provided.

Pig can operate on

data that is relational, nested, semi structured, or unstructured.

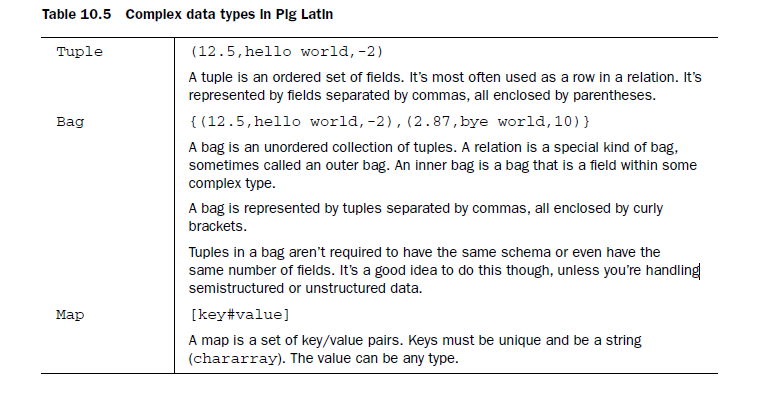

To support this

diversity of data, Pig supports complex data types, such as bags and tuples

that can be nested to form fairly sophisticated data structures.

User-defined functions

Pig was designed with many applications in mind—processing log data, natural language processing, analyzing network graphs, and so forth. It’s expected that many of the computations will require custom processing.

Knowing how to write

UDFs is a big part of learning to use Pig.

Basic Idea of Running Pig

We can run Pig Latin

commands in three ways—via the Grunt interactive shell, through a script file, and

as embedded queries inside Java programs. Each way can work in one of two modes—local

mode and Hadoop mode . (Hadoop mode is sometimes called Mapreduce mode in the

Pig documentation.)

You can think of Pig

programs as similar to SQL queries, and Pig provides a PigServer class that

allows any Java program to execute Pig queries.

Running Pig in Hadoop mode means the compile Pig program

will physically execute in a Hadoop installation. Typically the Hadoop installation

is a fully distributed cluster.

The execution mode is specified to the pig command via the -x or -exectype

option.

You can enter the Grunt shell in local mode through:

pig -x local

Entering the Grunt shell in Hadoop mode is

pig -x mapreduce

or use the pig command without arguments, as it chooses the Hadoop mode by default.

Expressions and functions

You can apply expressions and functions to data fields to compute various values.

Summary

Pig is a higher-level data processing layer on top of Hadoop. Its Pig Latin language provides programmers a more intuitive way to specify data flows.

It supports schemas in processing structured data, yet it’s flexible enough to work

with unstructured textor semistructured XML data. It’s extensible with the use of

UDFs.

It vastly simplifies data joining and job chaining—two aspects of MapReduce programming that many developers found overly complicated. To demonstrate its usefulness, our example of computing patent cocitation shows a complex MapReduce program written in a dozen lines of Pig Latin.

It vastly simplifies data joining and job chaining—two aspects of MapReduce programming that many developers found overly complicated. To demonstrate its usefulness, our example of computing patent cocitation shows a complex MapReduce program written in a dozen lines of Pig Latin.

No comments:

Post a Comment